ICEBERG Version 1 Release Notes

Web-site: http://iceberg.cs.berkeley.edu/release1/

Download: http://iceberg.cs.berkeley.edu/release1/iceberg1.tar.gz

Contact: iceberg-devel@iceberg.cs.berkeley.edu

Sun Jun 24 16:05:34 PDT 2001

Telecommunications networks are migrating towards Internet technology, with voice over IP maturing rapidly. We believe that the key open challenge for the converged network of the near future is its support for diverse access technologies (such as the Public Switched Telephone Network, digital cellular networks, pager networks, and IP-based networks) and innovative applications seamlessly integrating data and voice. The ICEBERG Project at U. C. Berkeley is seeking to meet this challenge with an open and composable service architecture founded on Internet-based standards for flow routing and agent deployment. This enables simple redirection of flows combined with pipelined transformations. These building blocks make possible new applications, like the Universal Inbox. Such an application intercepts flows in a range of formats, originating in different access networks (e.g., voice, fax, e-mail), and delivers them appropriately formatted for a particular end terminal (e.g., handset, fax machine, computer) based on the callee's preferences.
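The notes do not spell out how a chain of pipelined transformations is chosen for a flow. As a minimal sketch, assuming format conversion can be modelled as a directed graph whose edges are converters, a breadth-first search returns the shortest converter pipeline from the source format to the format the end terminal needs. The format names and converter names below are hypothetical and are not ICEBERG components.

```python
from collections import deque

# Hypothetical converter catalogue: (input_format, output_format) -> converter name.
CONVERTERS = {
    ("pcm_audio", "gsm_audio"): "pcm_to_gsm",
    ("gsm_audio", "pcm_audio"): "gsm_to_pcm",
    ("pcm_audio", "text"): "speech_to_text",
    ("text", "pcm_audio"): "text_to_speech",
    ("fax_image", "text"): "ocr",
    ("email", "text"): "strip_mime",
}


def find_pipeline(src, dst, converters=CONVERTERS):
    """Return the shortest list of converter names turning src into dst, or None."""
    if src == dst:
        return []
    # Build an adjacency list: format -> [(next_format, converter_name), ...]
    graph = {}
    for (a, b), name in converters.items():
        graph.setdefault(a, []).append((b, name))
    queue = deque([src])
    came_from = {src: None}  # format -> (previous_format, converter_name)
    while queue:
        fmt = queue.popleft()
        for nxt, name in graph.get(fmt, []):
            if nxt in came_from:
                continue  # already reached by an equal or shorter pipeline
            came_from[nxt] = (fmt, name)
            if nxt == dst:
                # Walk back from dst to src to recover the converter chain.
                chain, cur = [], dst
                while came_from[cur] is not None:
                    prev, conv = came_from[cur]
                    chain.append(conv)
                    cur = prev
                return list(reversed(chain))
            queue.append(nxt)
    return None  # no pipeline can produce dst


print(find_pipeline("email", "pcm_audio"))      # e-mail read out as voice
print(find_pipeline("fax_image", "gsm_audio"))  # fax delivered to a GSM handset
```

BFS is used here only because it yields the pipeline with the fewest conversion steps; a real path-creation service might instead weigh converter cost, quality, or placement.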

The design of the ICEBERG architecture is driven by the following types of services:

To enable any-to-any communications, integrated communication systems also need a component that interworks with the various networks for signaling translation and packetization. In our control architecture, this component is called an ICEBERG Access Point (IAP).

Another important decision in our architecture design is to leverage cluster computing platforms for processing scalability and local-area robustness. Our control architecture requires processing scalability to a large call volume, continuous availability through fault masking, and cost-effectiveness. Clusters of commodity PCs interconnected by a high-speed System Area Network (SAN), acting as a single large-scale computer [NOW], are especially well-suited to meeting these challenges. Cluster computing platforms such as [Ninja] provide a simple service development environment that shields service developers from the cluster management problems of load balancing, availability, and failure management. We plan to build the ICEBERG system on Ninja for these benefits. Our current release is built on the Ninja vSpace computing platform. vSpace is a service platform with event-based asynchronous communication between "workers". vSpace is built on top of SDDS (Scalable Distributed Data Structure). The documentation for this can be found here.
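To make "event-based asynchronous communication between workers" concrete, here is a toy single-threaded dispatcher in which workers never call each other directly; they only enqueue events for one another. This is a sketch of the general pattern, not the vSpace API: the `EventLoop` class, its methods, and the worker names are hypothetical.

```python
from collections import deque


class EventLoop:
    """Toy dispatcher: workers exchange events through a queue, not blocking calls."""

    def __init__(self):
        self.queue = deque()
        self.workers = {}

    def register(self, name, handler):
        # handler(event, loop) is invoked once per event addressed to `name`.
        self.workers[name] = handler

    def send(self, dest, event):
        # Asynchronous send: enqueue the event and return immediately.
        self.queue.append((dest, event))

    def run(self):
        # Drain the queue; handlers may enqueue further events while it runs.
        while self.queue:
            dest, event = self.queue.popleft()
            self.workers[dest](event, self)


log = []


def call_agent(event, loop):
    log.append(("call_agent", event["type"]))
    if event["type"] == "setup":
        # Ask the (hypothetical) name mapping worker to resolve the callee.
        loop.send("name_mapping", {"type": "resolve", "user": event["callee"]})


def name_mapping(event, loop):
    log.append(("name_mapping", event["user"]))
    loop.send("call_agent", {"type": "resolved", "user": event["user"]})


loop = EventLoop()
loop.register("call_agent", call_agent)
loop.register("name_mapping", name_mapping)
loop.send("call_agent", {"type": "setup", "callee": "alice"})
loop.run()
print(log)
```

Because no handler blocks waiting for a reply, one thread can interleave many calls; the reply simply arrives later as another event.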

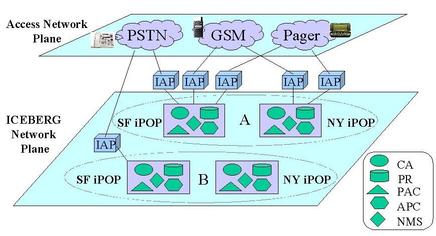

The following figure shows a high-level view of how the ICEBERG system could be used in the real world. The ICEBERG network plane shows two ICEBERG Service Providers representing two different administrative domains: A and B. The services they provide are customizable integrated communication services on top of the Internet. Similar to the way that Internet Service Providers (ISPs) provide Internet services through Points of Presence (POPs) at different geographic locations, ICEBERG Service Providers consist of ICEBERG Points of Presence (iPOPs). Both A and B have iPOPs in San Francisco (SF) and New York (NY). Each iPOP contains Call Agents (CAs), which perform call setup and control; an Automatic Path Creation Service (APC), which establishes data flows between communication endpoints; a Preference Registry (PR), which manages users' call receiving preferences; a Personal Activity Coordinator (PAC), which tracks user location or activity; and a Name Mapping Service (NMS), which resolves user names in various networks. iPOPs must scale to a large population and a large call volume, be highly available, and be resilient to failures. This leads us to build the iPOP on the Ninja cluster computing platform. The ICEBERG network can be viewed as an overlay network of iPOPs on top of the Internet.
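The division of labour among the Call Agent, Name Mapping Service, and Preference Registry can be illustrated with a small routing function. This is a minimal sketch, assuming preferences are ordered rules keyed on the incoming medium and the hour of day; the dictionaries stand in for the NMS and PR, and every user name, address, and rule format shown is hypothetical.

```python
from dataclasses import dataclass

# Hypothetical stand-in for the Name Mapping Service: user -> address per network.
NAME_MAP = {
    "alice": {"phone": "+1-510-555-0100", "email": "alice@example.com"},
}

# Hypothetical stand-in for the Preference Registry: ordered callee rules of
# (incoming medium, hours of day the rule applies, target network).
PREFERENCES = {
    "alice": [
        ("voice", set(range(9, 17)), "phone"),  # working hours: ring the phone
        ("voice", set(range(0, 24)), "email"),  # otherwise: voice mail as e-mail
        ("fax", set(range(0, 24)), "email"),
    ],
}


@dataclass
class Route:
    network: str
    address: str


def route_call(callee, medium, hour):
    """Pick a delivery endpoint from the callee's first matching rule, or None."""
    for rule_medium, hours, network in PREFERENCES.get(callee, []):
        if rule_medium == medium and hour in hours:
            address = NAME_MAP.get(callee, {}).get(network)
            if address is not None:
                return Route(network, address)
    return None


print(route_call("alice", "voice", 10))  # during working hours
print(route_call("alice", "voice", 20))  # after hours
```

Only the callee's rules are consulted, which mirrors the release's stance that the callee decides how an incoming communication is received.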

More details can be found in the following papers:

ICEBERG v0, which was built on Ninja iSpace, emphasized functionality rather than important system properties such as scalability and robustness. Similarly, Ninja iSpace, the underlying platform, emphasized programmability rather than cluster computing properties such as load balancing and failover.

ICEBERG v1 aims to provide a full-fledged, scalable, and robust unified communication system that integrates heterogeneous communication networks and devices, such as the PSTN and GSM. ICEBERG v1 (in particular, the iPOP) is built on top of Ninja vSpace, a much more complete cluster computing platform that offers load balancing and failover across clones of service instances. Ninja vSpace also provides an event-driven programming model, circumventing inefficient Java thread and RMI implementations. Moving to Ninja vSpace required a complete reimplementation of ICEBERG.
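Load balancing and failover across clones can be pictured as round-robin dispatch that skips clones that have failed. The sketch below assumes a failed clone signals failure by raising an exception; `CloneGroup`, `CloneUnavailable`, and `make_clone` are hypothetical names, and the code does not reproduce how vSpace actually detects failures or balances load.

```python
import itertools


class CloneUnavailable(Exception):
    """Hypothetical failure signal raised by a clone that is down."""


class CloneGroup:
    """Round-robin dispatch across clones of one service, failing over on error."""

    def __init__(self, clones):
        self.clones = list(clones)
        self._next = itertools.cycle(range(len(self.clones)))

    def dispatch(self, request):
        # Try each clone at most once, starting from the next round-robin slot.
        for _ in range(len(self.clones)):
            clone = self.clones[next(self._next)]
            try:
                return clone(request)
            except CloneUnavailable:
                continue  # fail over to the next clone
        raise CloneUnavailable("all clones failed")


def make_clone(name, alive=True):
    def handle(request):
        if not alive:
            raise CloneUnavailable(name)
        return f"{name} handled {request}"
    return handle


group = CloneGroup([make_clone("node1"), make_clone("node2", alive=False), make_clone("node3")])
print([group.dispatch(i) for i in range(3)])
```

Round-robin spreads requests evenly when clones are healthy; the retry loop is what masks a single clone's failure from the caller.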

In addition, ICEBERG v1 implements a full-fledged signaling protocol (see paper), with IAPs sending soft-state refreshes and heartbeats to the serving clusters, whereas v0 only included session maintenance. Both IAPs and Call Agents are driven by call state machines, and keep-alive messages from an IAP also carry the current state of the IAP's state machine. In place of the IP multicast group service used in v0, v1 uses an application-level group service (ALGS, described in paper). v1 also includes a new version of the preference configurator, which allows callees to specify call receiving preferences. We maintain the philosophy that the callee is the ultimate decision maker on receiving an incoming communication, though callers may indicate their wishes (this is not enabled in this release). The preference configurator reflects the underlying call state machine operation and lets users refine the state machine at a high level in a user-friendly way.
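Soft state means the cluster keeps an IAP's entry only as long as refreshes keep arriving; a missed refresh eventually removes the entry without any explicit teardown message. The sketch below assumes a fixed timeout and passes the current time in explicitly so the behaviour is deterministic. The class, the IAP identifiers, and the state names are hypothetical; the real timeout values and message formats are not given in these notes.

```python
class SoftStateTable:
    """Keeps IAP entries alive only while periodic keep-alives keep arriving."""

    def __init__(self, timeout):
        self.timeout = timeout
        self.entries = {}  # iap_id -> (last_refresh_time, reported_state)

    def refresh(self, iap_id, state, now):
        # Each keep-alive carries the IAP's current call state machine state.
        self.entries[iap_id] = (now, state)

    def expire(self, now):
        """Drop entries not refreshed within `timeout`; return the dropped ids."""
        stale = [i for i, (t, _) in self.entries.items() if now - t > self.timeout]
        for i in stale:
            del self.entries[i]
        return stale

    def state_of(self, iap_id):
        entry = self.entries.get(iap_id)
        return entry[1] if entry else None


table = SoftStateTable(timeout=3.0)
table.refresh("gsm-iap", "CONNECTED", now=0.0)
table.refresh("pstn-iap", "IDLE", now=0.0)
table.refresh("gsm-iap", "CONNECTED", now=2.5)  # pstn-iap misses its refresh
print(table.expire(now=4.0))
print(table.state_of("gsm-iap"), table.state_of("pstn-iap"))
```

Including the state in each keep-alive lets a replacement Call Agent clone rebuild its view of a call from the next refresh, rather than from state held by the failed clone.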

ICEBERG v1 also leverages the cluster's persistent state management (the Distributed Data Structures, or DDS), in particular its distributed hash tables. Name mapping records and user call receiving preferences are stored in and retrieved from these hash tables; the Preference Registry and the Name Mapping Service are essentially front ends to the distributed hash tables holding the preference and name mapping data.
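The core idea of a distributed hash table is that each key is deterministically assigned to one storage brick by hashing it. This minimal sketch uses plain dicts as bricks and simple modulo placement; a real DDS also handles replication and recovery, which the sketch omits. `PartitionedHashTable` and the key formats are hypothetical, not the Ninja DDS API.

```python
import hashlib


class PartitionedHashTable:
    """Spreads keys over a fixed set of 'bricks' (plain dicts) by hashing the key."""

    def __init__(self, num_bricks):
        self.bricks = [dict() for _ in range(num_bricks)]

    def _brick_for(self, key):
        # Stable hash (unlike Python's salted hash()) so placement is repeatable.
        digest = hashlib.sha1(key.encode("utf-8")).digest()
        return self.bricks[int.from_bytes(digest[:8], "big") % len(self.bricks)]

    def put(self, key, value):
        self._brick_for(key)[key] = value

    def get(self, key, default=None):
        return self._brick_for(key).get(key, default)


# Hypothetical front ends: name mapping and preferences kept in separate tables.
name_table = PartitionedHashTable(num_bricks=4)
pref_table = PartitionedHashTable(num_bricks=4)

name_table.put("pstn:+1-510-555-0100", "alice")
pref_table.put("alice", {"voice": "phone", "fax": "email"})

user = name_table.get("pstn:+1-510-555-0100")
print(user, pref_table.get(user))
```

Modulo placement keeps the sketch short, but it reassigns most keys if the number of bricks changes; production systems typically use a placement scheme that limits that movement.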

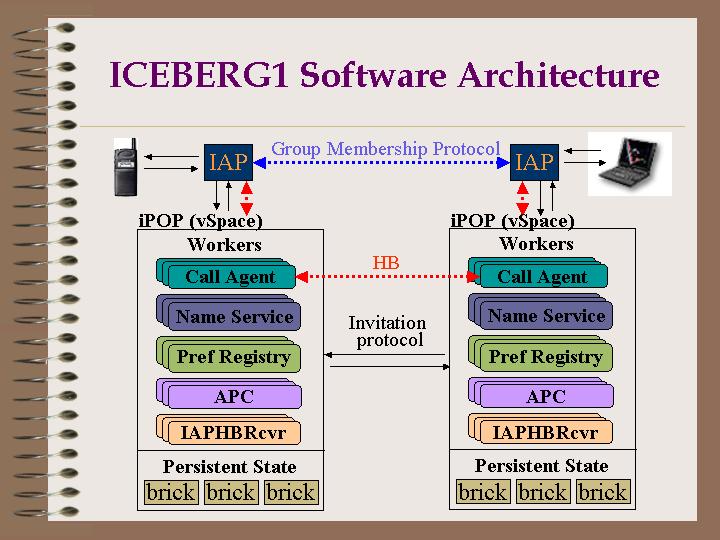

This picture depicts the iPOP software architecture on top of Ninja vSpace and the protocol interactions among its components and with IAPs. A Ninja vSpace worker is a group of clones, each running on a different machine; failover across the clones is managed by Ninja vSpace. The bricks in the picture are distributed hash table bricks, which maintain service-instance state that lasts beyond a single session.

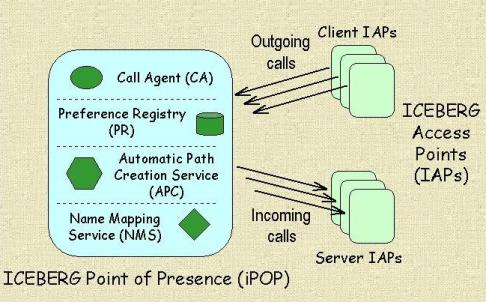

The following figures depict the components that are part of the release.

This figure shows the components of the iPOP that are in the release. The Call Agent, Preference Registry, Name Mapping Service, and Automatic Path Creation Service belong to the iPOP.

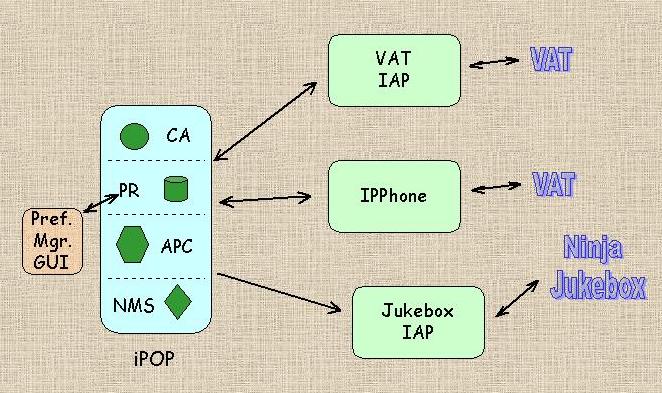

This figure shows the different IAPs that are part of the release. The preference manager is a GUI that can be used to specify call receiving preferences.

The available IAPs in this release are: